How to Choose Which Models to Offer Users

This guide demonstrates how to configure which AI models are available to users in Open WebUI through Ozeki AI Gateway. By controlling model availability at the gateway level, you can manage costs, ensure appropriate model selection, and provide a curated experience for your users.

What is Model Selection in Ozeki AI Gateway?

Model selection in Ozeki AI Gateway allows you to control which AI models are accessible to end users through the API URL. This is particularly useful when working with free models or when you want to offer specific models to selected groups of users. The gateway acts as a filter, ensuring that only approved models appear in the Open WebUI model selector.
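The filtering idea can be illustrated with a few lines of Python. This is only a conceptual sketch of an allowlist, not Ozeki AI Gateway's actual implementation; the function name `visible_models` and the model names in the usage note are made up for illustration.

```python
def visible_models(upstream_models: list[str], approved: list[str]) -> list[str]:
    """Return only the upstream models that are on the approved list.

    Conceptual illustration only: the provider may offer many models,
    but users see just the ones an administrator has approved.
    The upstream order is preserved.
    """
    approved_set = set(approved)
    return [model for model in upstream_models if model in approved_set]
```

For example, if the provider offered the hypothetical models `vendor/a`, `vendor/b`, and `vendor/c`, and only `vendor/a` and `vendor/c` were approved, users would see just those two.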

How to Choose Models to Offer Users (Quick Steps)

- Open Ozeki AI Gateway

- Verify providers

- Check configured users and API keys

- Validate existing routes

- Edit Ozeki AI Gateway provider configuration

- Toggle to allow other models

- Select specific models to offer to users

- Save the provider configuration

- Select an LLM in Open WebUI

- Test the selected model

How to Choose Models (Video tutorial)

In this video tutorial, you will learn step by step how to choose which models to offer users. The video covers verifying your configuration, selecting free models from OpenRouter, and confirming that they appear correctly in Open WebUI.

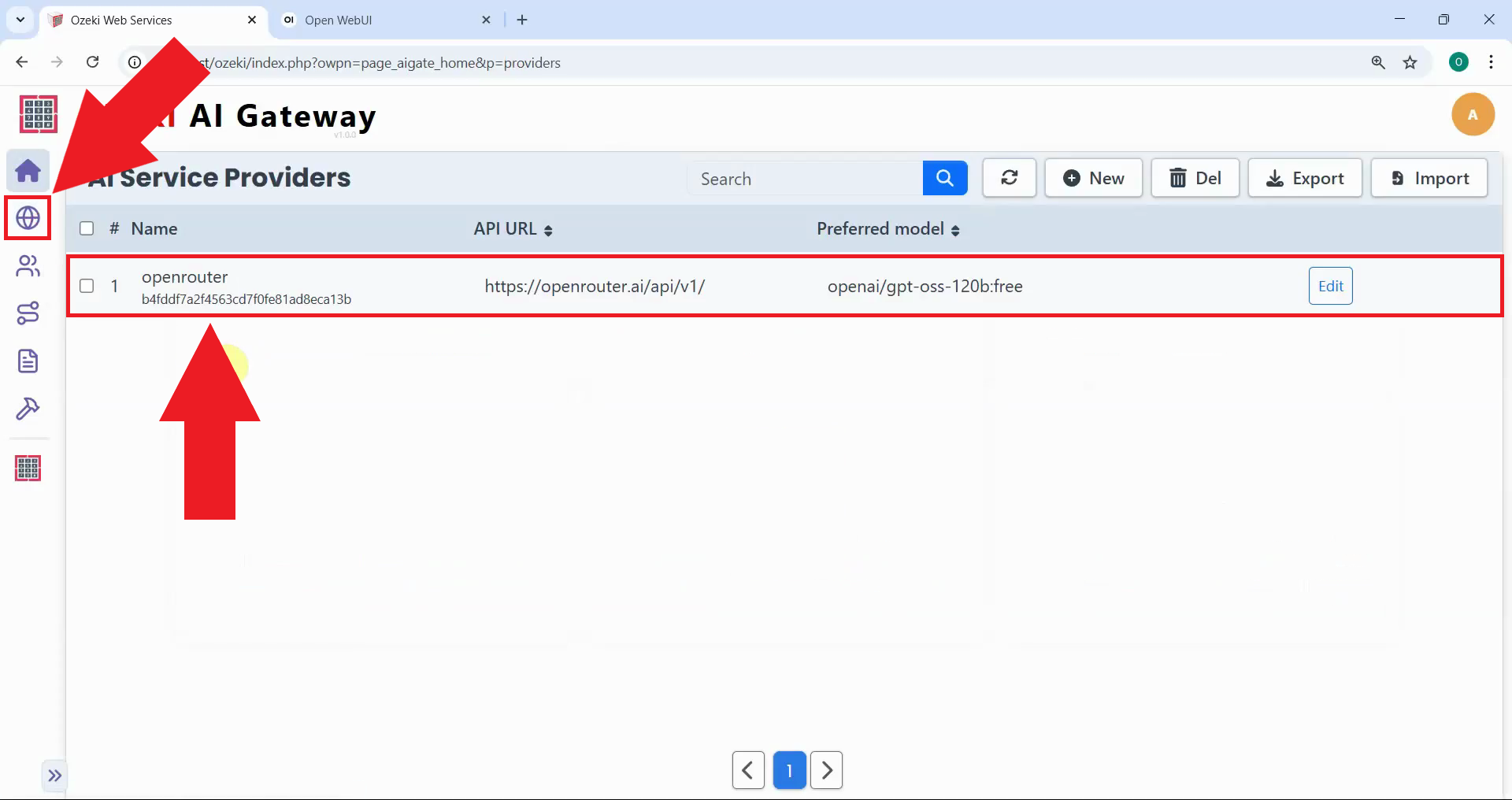

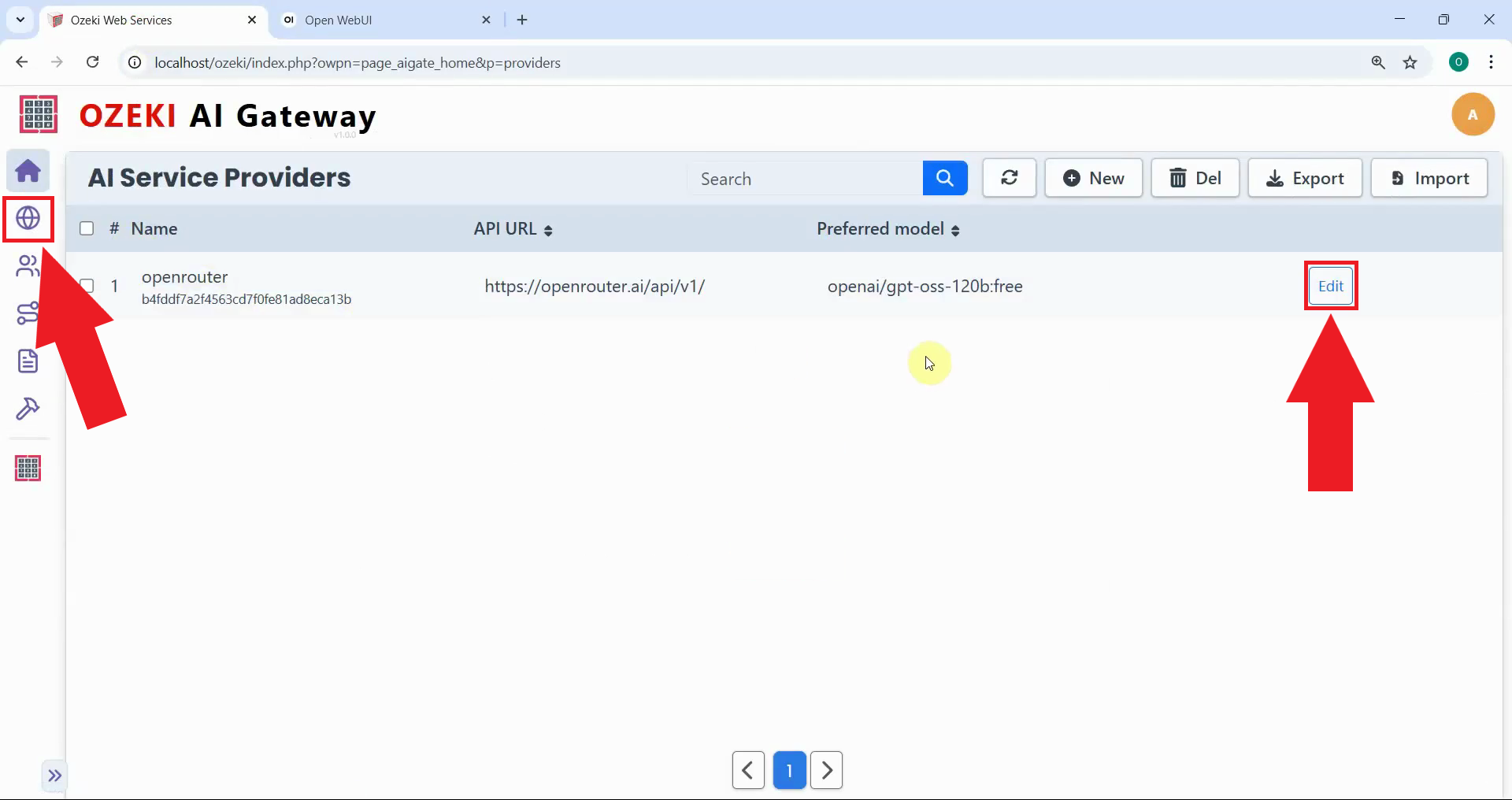

Step 1 - Verify providers

Open Ozeki AI Gateway and click "Providers" on the sidebar. Verify that your "openrouter" provider is properly configured with an OpenRouter API key (Figure 1).

You need a working installation of Ozeki AI Gateway with at least one provider, one AI user, and one route configured. If you haven't set this up yet, please check out the Ozeki AI Gateway Quick Start Guide.

You need a valid OpenRouter API key configured in your Ozeki AI Gateway provider settings. If you don't have an API key, see our How to Create a Free API Key for OpenRouter guide.

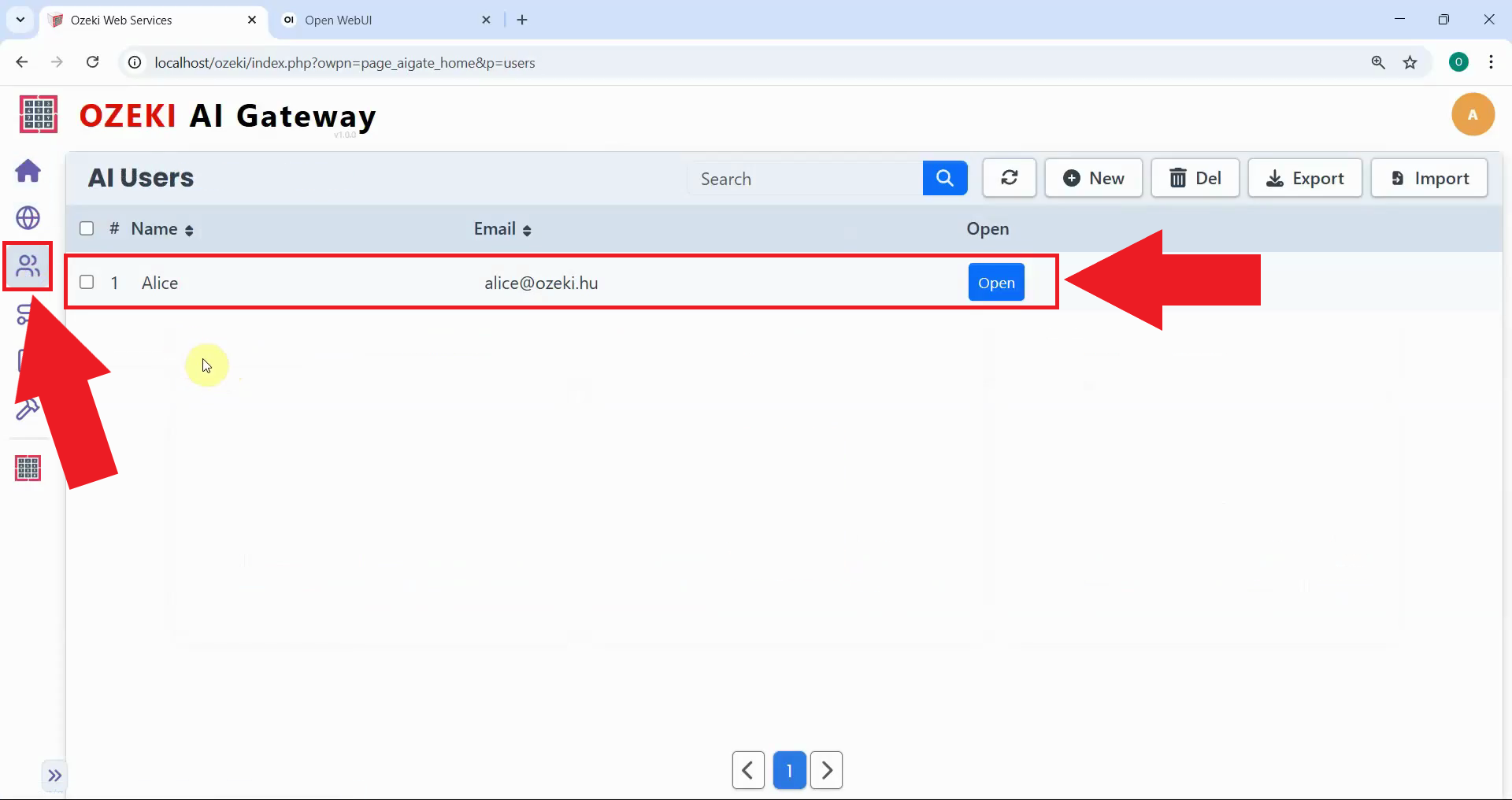

Step 2 - Check users

Navigate to the Users section and confirm that you have at least one user configured with an API key (Figure 2).

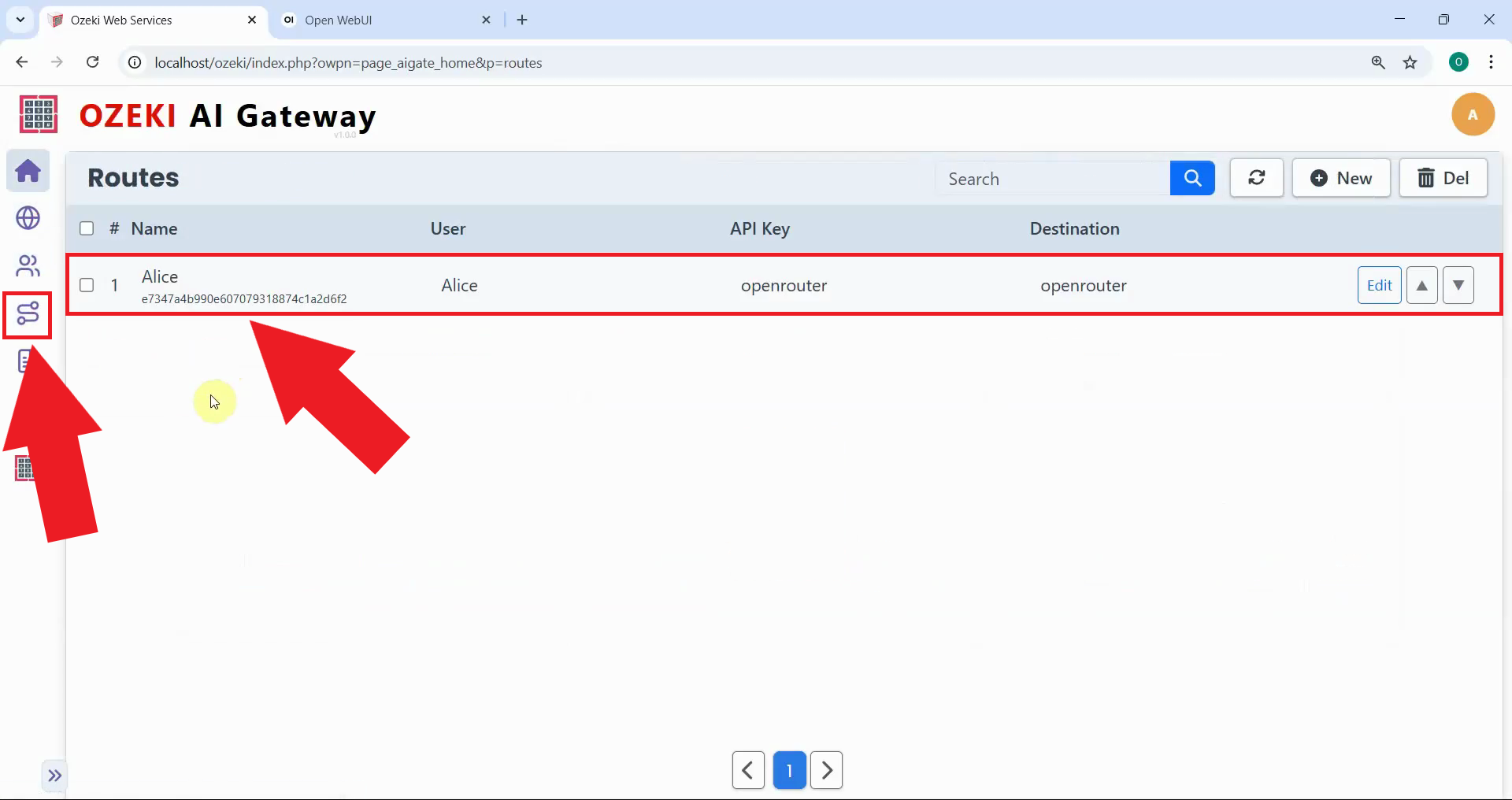

Step 3 - Validate routes

Click "Routes" on the sidebar and verify that you have a route connecting your Ozeki user to the "openrouter" provider group (Figure 3).

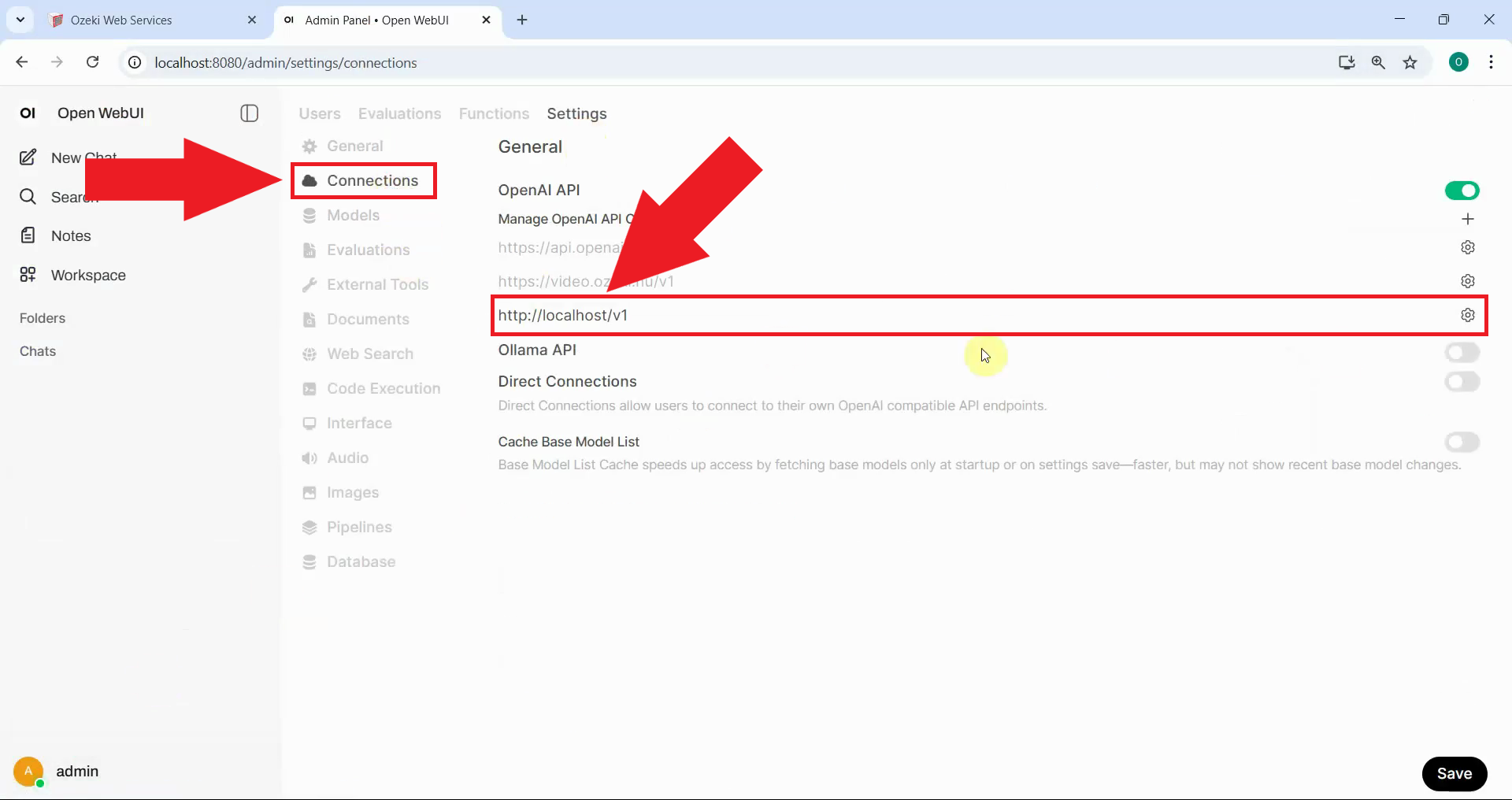

Step 4 - Confirm Open WebUI connections

Switch to your Open WebUI interface and open the Admin Panel. Navigate to Settings > Connections and verify that the connection to your LLM API endpoint URL is set up and active (Figure 4).

If you haven't set up Open WebUI, please check out our How to Setup OpenWebUI in Windows guide.

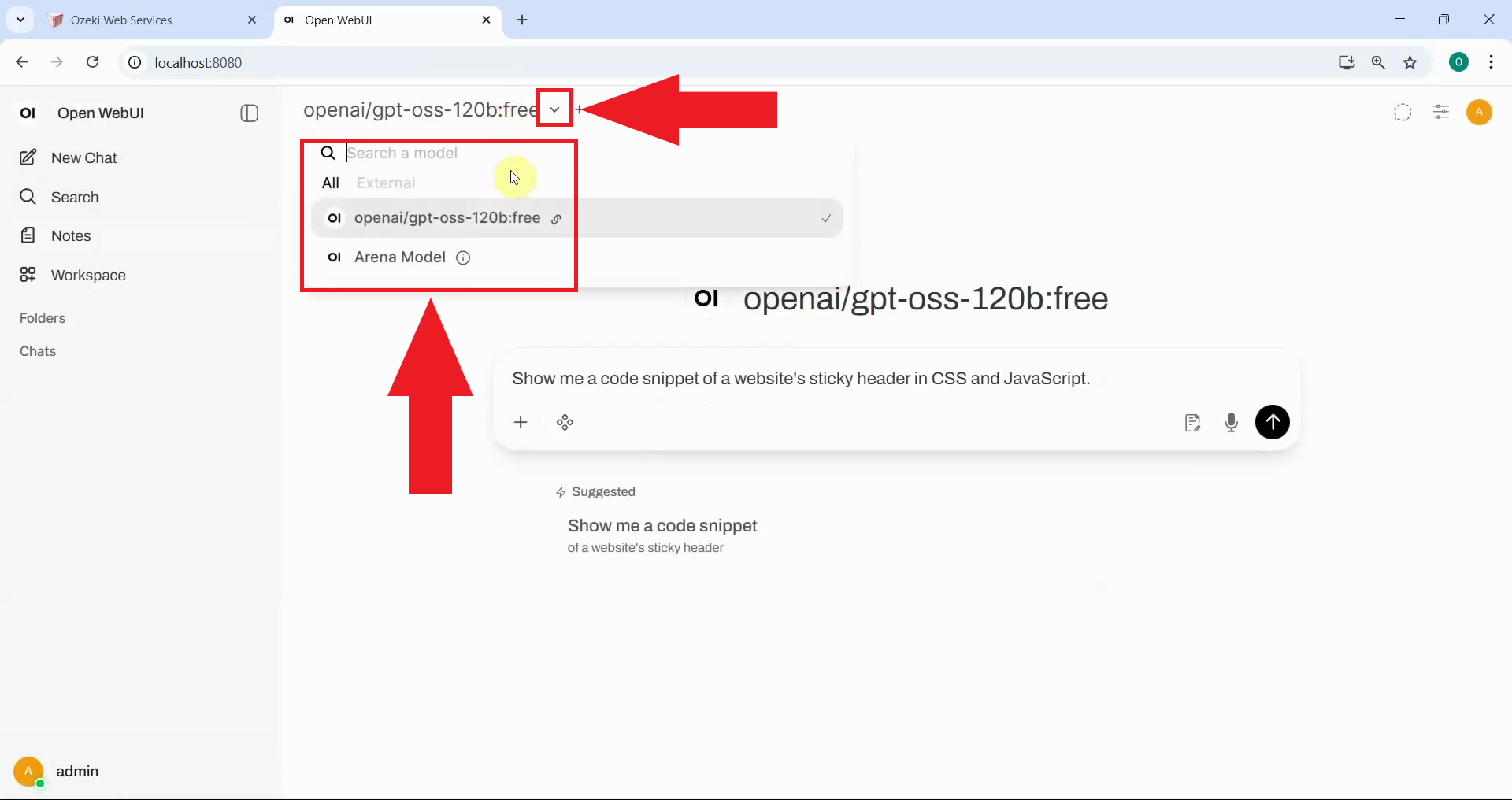

Step 5 - Check Open WebUI models

In Open WebUI, open the model selector dropdown and note which models are currently available. This step establishes your baseline before making changes to the model selection (Figure 5).
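If you prefer to record the baseline outside the browser, the model list can also be queried with a short script. The sketch below assumes that the gateway's LLM API endpoint follows the OpenAI-style convention of a `GET <base_url>/models` call that returns `{"data": [{"id": ...}]}`; this guide does not confirm that. The `BASE_URL` and `API_KEY` values are placeholders that you must replace with your own.

```python
import json
import urllib.request

# Assumptions (not taken from this guide): the gateway exposes an
# OpenAI-style model list endpoint. Both values below are placeholders.
BASE_URL = "http://localhost:8080/v1"  # hypothetical gateway API URL
API_KEY = "your-gateway-api-key"       # hypothetical user API key


def build_models_request(base_url: str, api_key: str) -> urllib.request.Request:
    """Build a GET request for the model list with a bearer token."""
    url = base_url.rstrip("/") + "/models"
    return urllib.request.Request(
        url, headers={"Authorization": f"Bearer {api_key}"}, method="GET"
    )


def extract_model_ids(payload: dict) -> list[str]:
    """Return the sorted model IDs from an OpenAI-style model list body."""
    return sorted(item["id"] for item in payload.get("data", []) if "id" in item)


def fetch_model_ids(base_url: str, api_key: str) -> list[str]:
    """Query the endpoint and return model IDs (performs a network request)."""
    request = build_models_request(base_url, api_key)
    with urllib.request.urlopen(request, timeout=10) as response:
        return extract_model_ids(json.load(response))
```

Calling `fetch_model_ids(BASE_URL, API_KEY)` and saving the result gives you a list you can compare against after changing the provider configuration.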

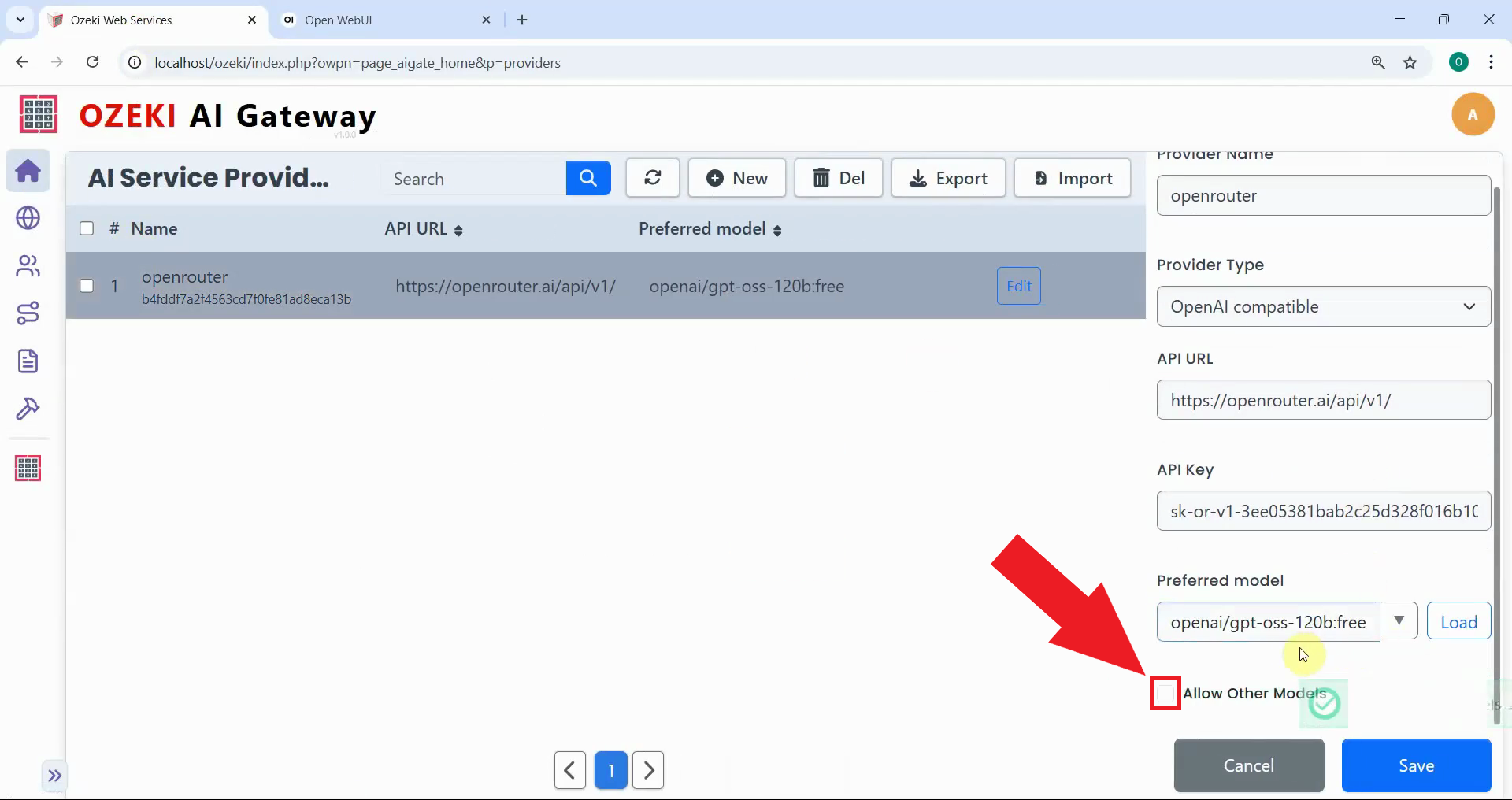

Step 6 - Edit provider

Return to Ozeki AI Gateway and navigate to the Providers section. Locate your "openrouter" provider and click the edit button. This will open the provider configuration panel (Figure 6).

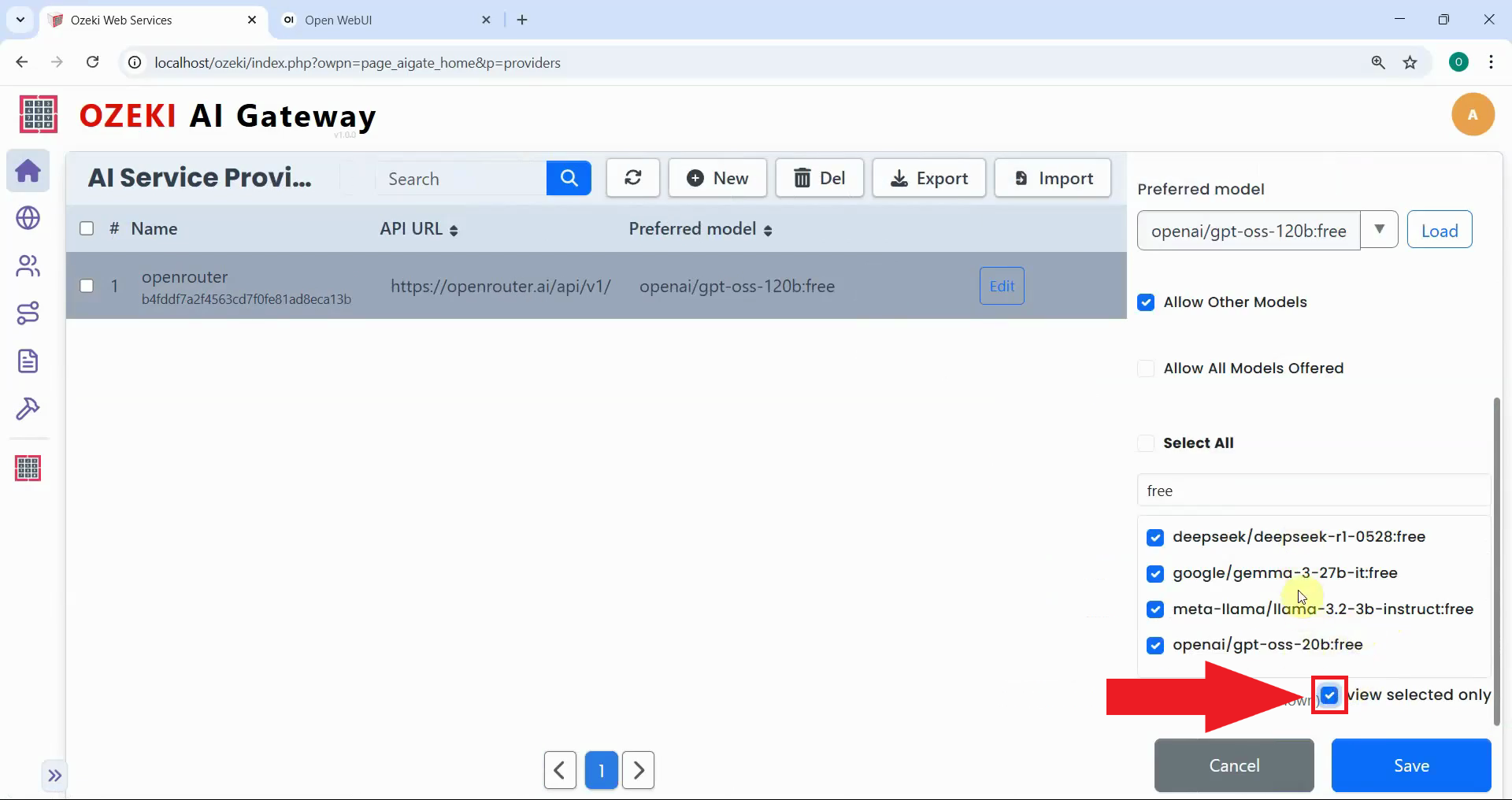

Step 7 - Toggle allow other models

In the provider configuration panel, locate the "Allow other models" checkbox. Enable this option to access the model selection list (Figure 7).

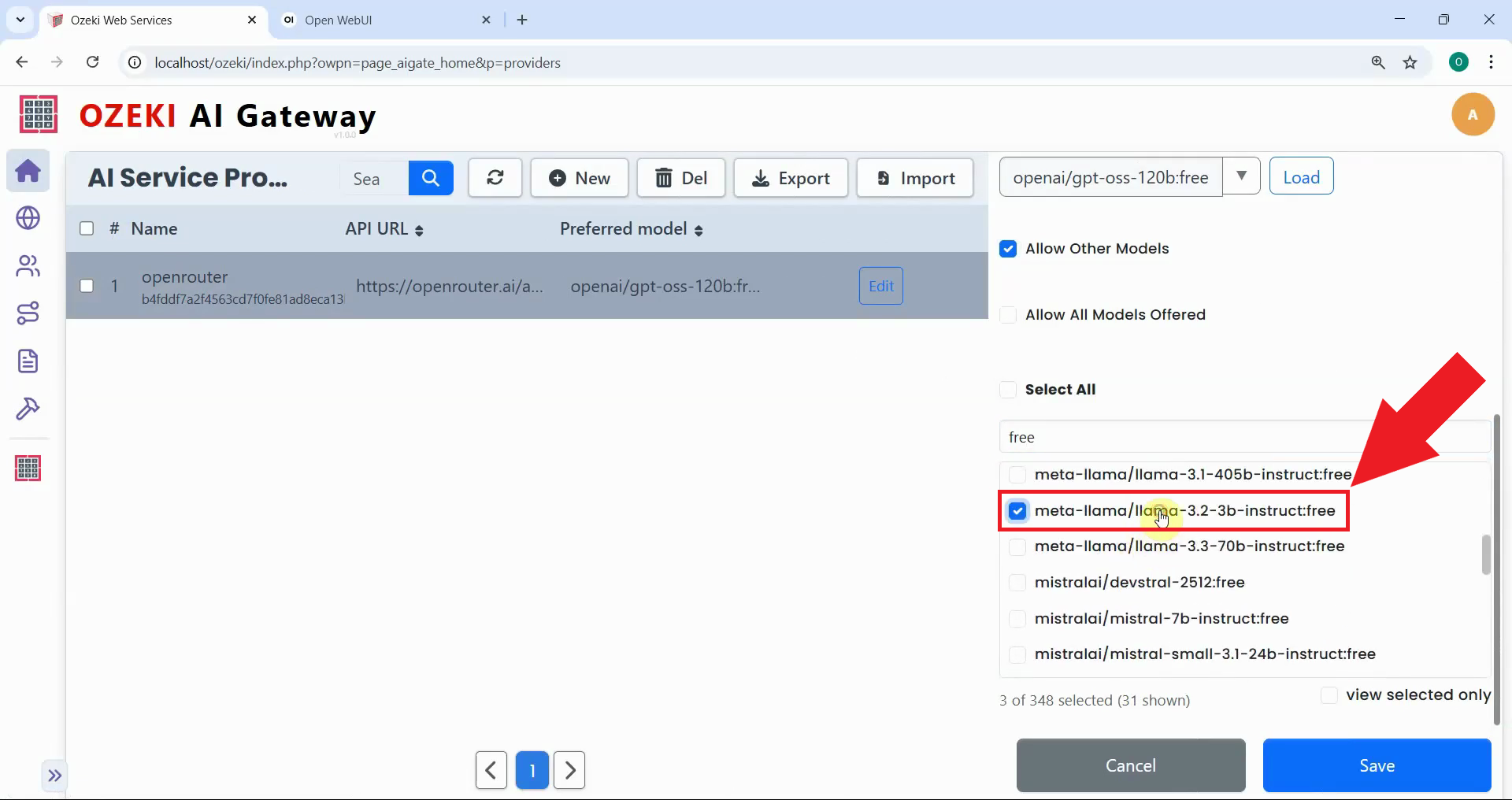

Step 8 - Select models

A list of available models from OpenRouter will appear. Browse through the list and select the models you want to use by checking their corresponding checkboxes (Figure 8).

Step 9 - View selected models (Optional)

After selecting your models, review the list of checked models to confirm your selections (Figure 9).

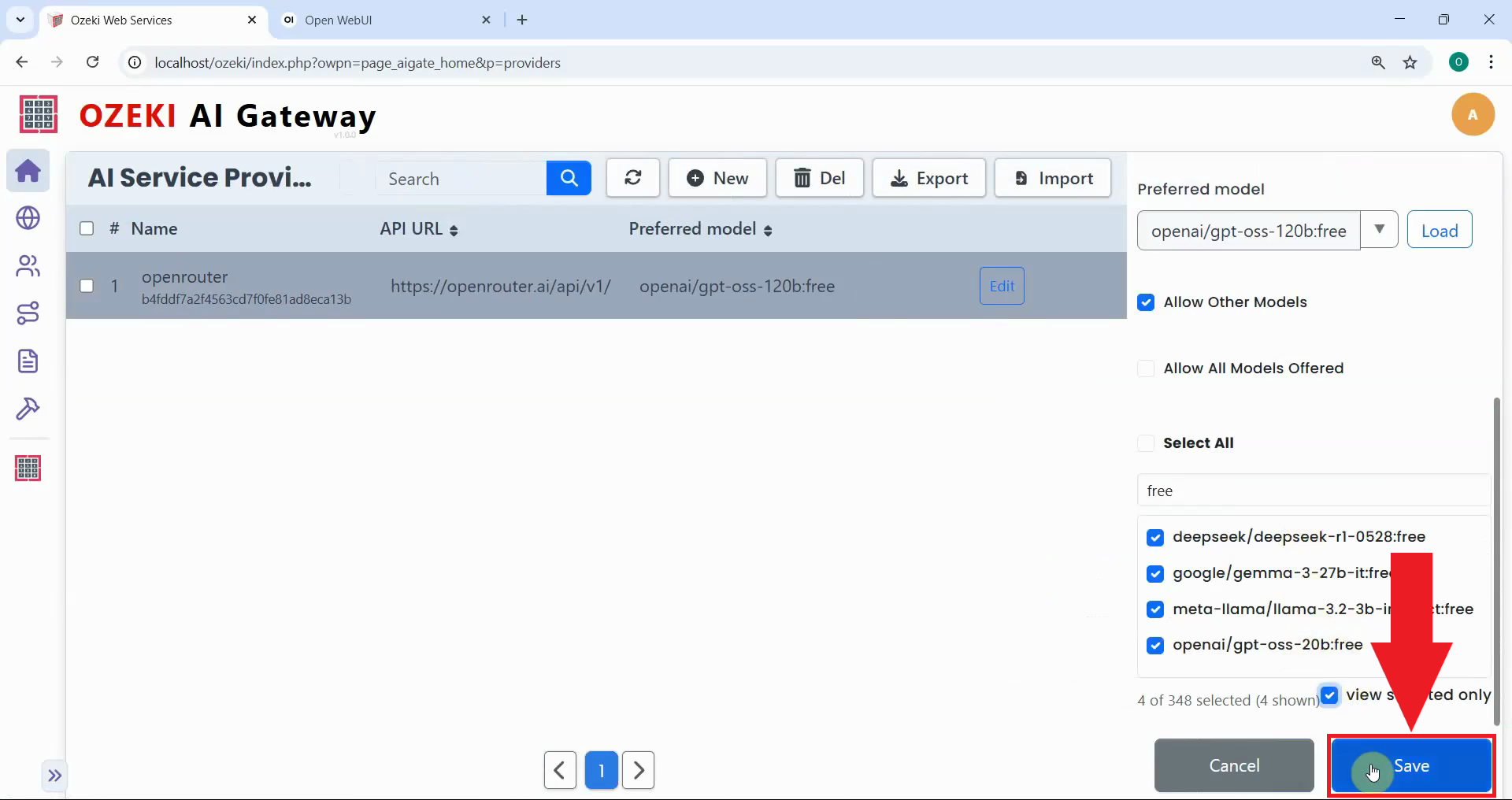

Step 10 - Save selection

Click the "Save" button to save your provider configuration with the selected models (Figure 10).

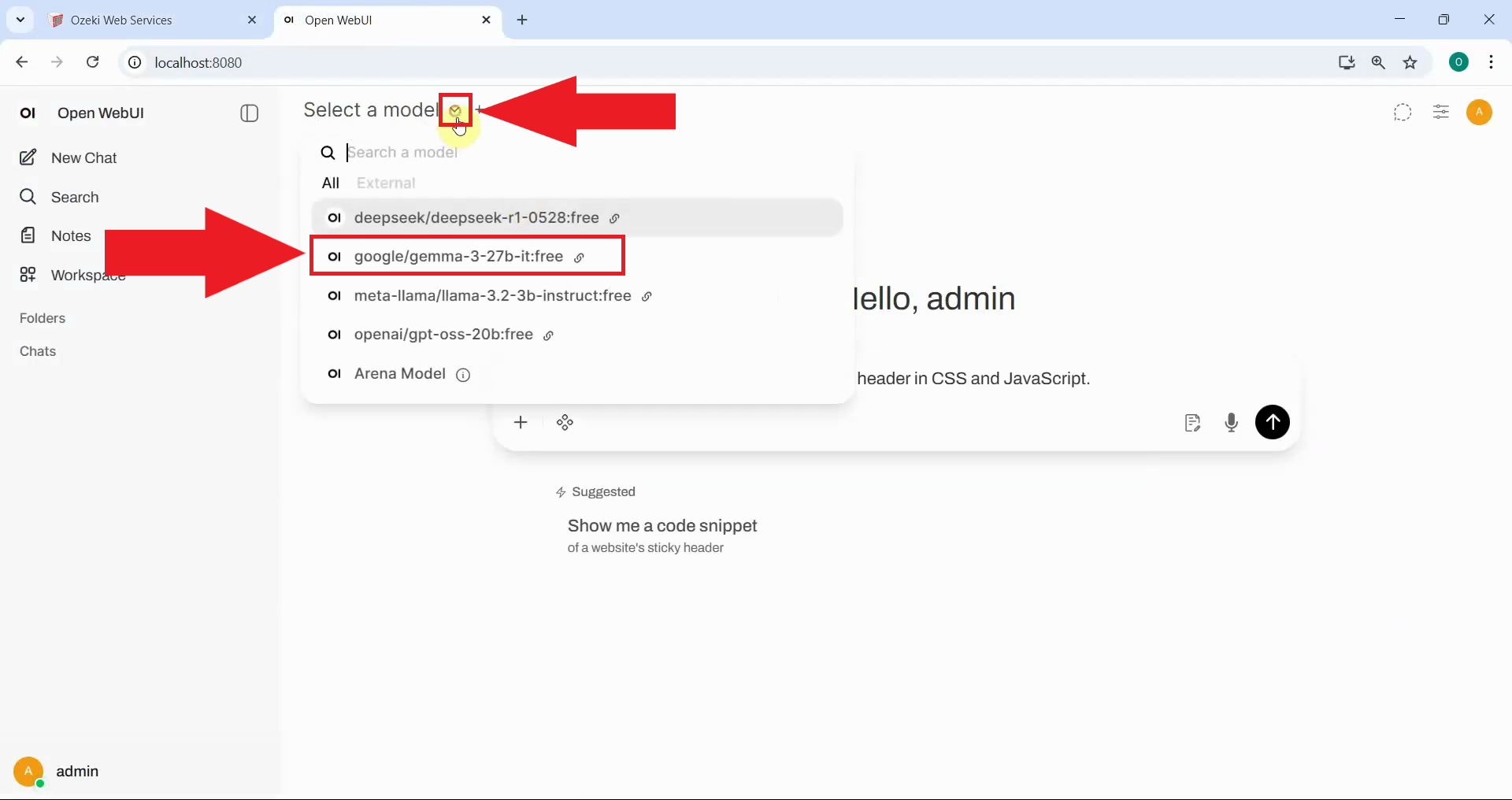

Step 11 - Select a model in Open WebUI

Return to Open WebUI and open the model selector dropdown menu. You should see the models you configured in Ozeki AI Gateway (Figure 11).
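To check the result more systematically than by eye, you can compare the list you noted before the change with the list shown now and with the models you selected in the gateway. The helper below is a minimal sketch that works on plain lists of model names; the function name `compare_model_lists` is hypothetical and not part of either product.

```python
def compare_model_lists(
    baseline: list[str], current: list[str], selected: list[str]
) -> dict[str, list[str]]:
    """Summarize how the visible model list changed.

    baseline: models visible before editing the provider
    current:  models visible now
    selected: models checked in the gateway's provider configuration
    """
    baseline_set, current_set, selected_set = set(baseline), set(current), set(selected)
    return {
        # Newly visible compared with the baseline.
        "added": sorted(current_set - baseline_set),
        # Visible before, but no longer offered.
        "removed": sorted(baseline_set - current_set),
        # Selected in the gateway, but not visible to users.
        "missing": sorted(selected_set - current_set),
        # Visible to users, but not in the selection.
        "unexpected": sorted(current_set - selected_set),
    }
```

If both `missing` and `unexpected` come back empty, the models shown to users match your selection exactly.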

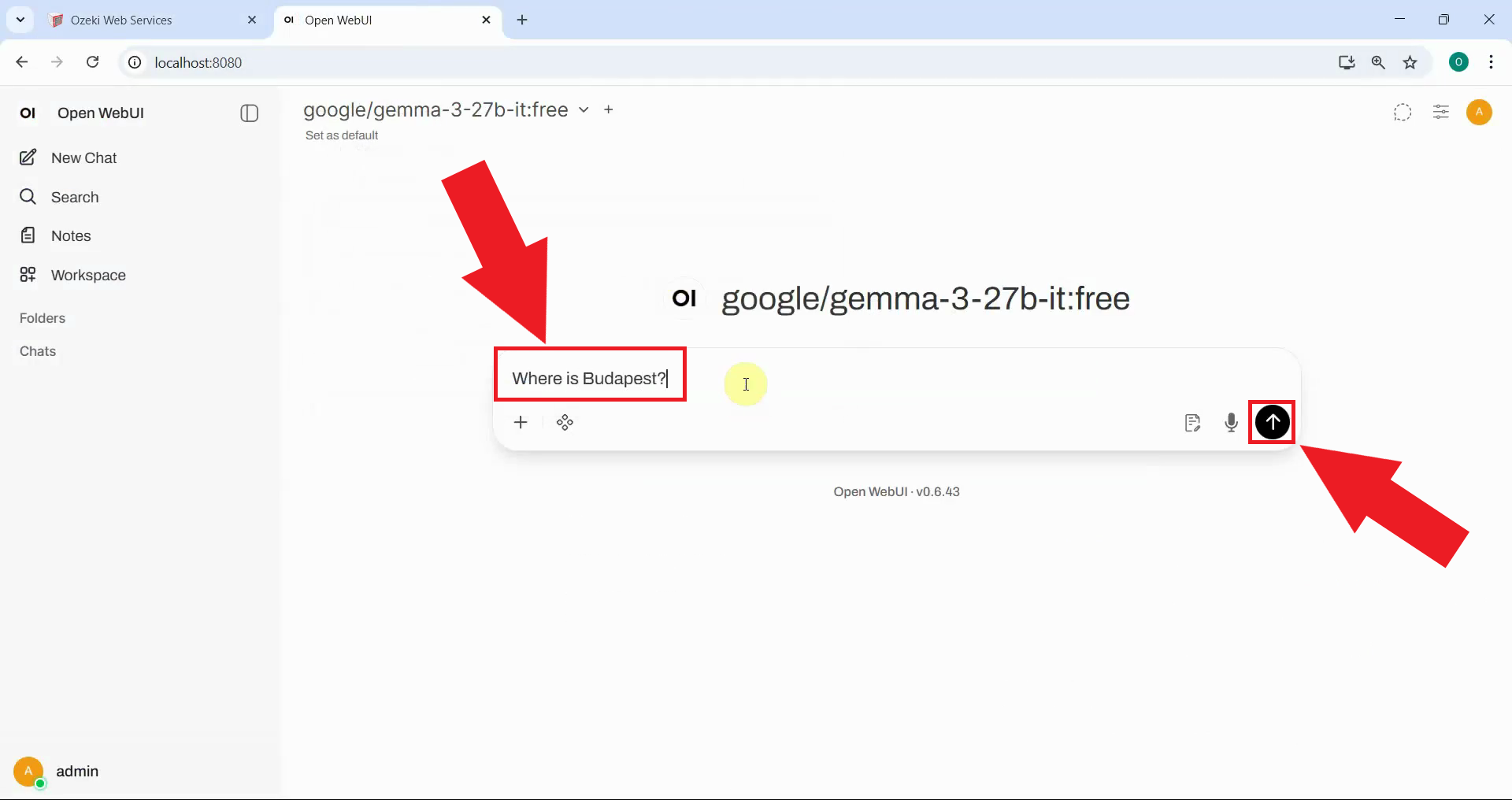

Step 12 - Test model

To test the LLM connection, type a question in the chat textbox and press Enter or click the send button (Figure 12).

Step 13 - Response received

You should see the AI's response appear in the chat window, confirming that Open WebUI is successfully connected to your LLM API and working as expected (Figure 13).
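The same test can be sketched as a script, which is handy if you want to check several models in a row. As before, this assumes an OpenAI-style `POST <base_url>/chat/completions` endpoint on the gateway, which this guide does not confirm; the base URL, API key, and model name you pass in are your own values.

```python
import json
import urllib.request


def build_chat_request(
    base_url: str, api_key: str, model: str, prompt: str
) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request (assumed API shape)."""
    body = json.dumps(
        {"model": model, "messages": [{"role": "user", "content": prompt}]}
    ).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_reply(payload: dict) -> str:
    """Return the first reply text, or an empty string if there is none."""
    choices = payload.get("choices") or []
    if not choices:
        return ""
    return choices[0].get("message", {}).get("content") or ""


def ask(base_url: str, api_key: str, model: str, prompt: str) -> str:
    """Send the prompt and return the reply (performs a network request)."""
    request = build_chat_request(base_url, api_key, model, prompt)
    with urllib.request.urlopen(request, timeout=60) as response:
        return extract_reply(json.load(response))
```

A non-empty string returned by `ask(...)` for one of your selected models is the scripted equivalent of seeing the response appear in the chat window.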

Conclusion

You have successfully configured model selection in Ozeki AI Gateway, giving you control over which AI models are available to users. You can return to the provider configuration at any time to add or remove models as your needs change, making this a flexible solution for managing AI model access.